mirror of

https://github.com/oceanprotocol/docs.git

synced 2024-11-01 15:55:34 +01:00

3.8 KiB

3.8 KiB

| title | section | description |

|---|---|---|

| Compute Workflow | developers | Understanding the Compute-to-Data (C2D) Workflow |

🚀 Now that we've introduced the key actors and provided an overview of the process, it's time to delve into the nitty-gritty of the compute workflow. We'll dissect each step, examining the inner workings of Compute-to-Data (C2D). From data selection to secure computations, we'll leave no stone unturned in this exploration.

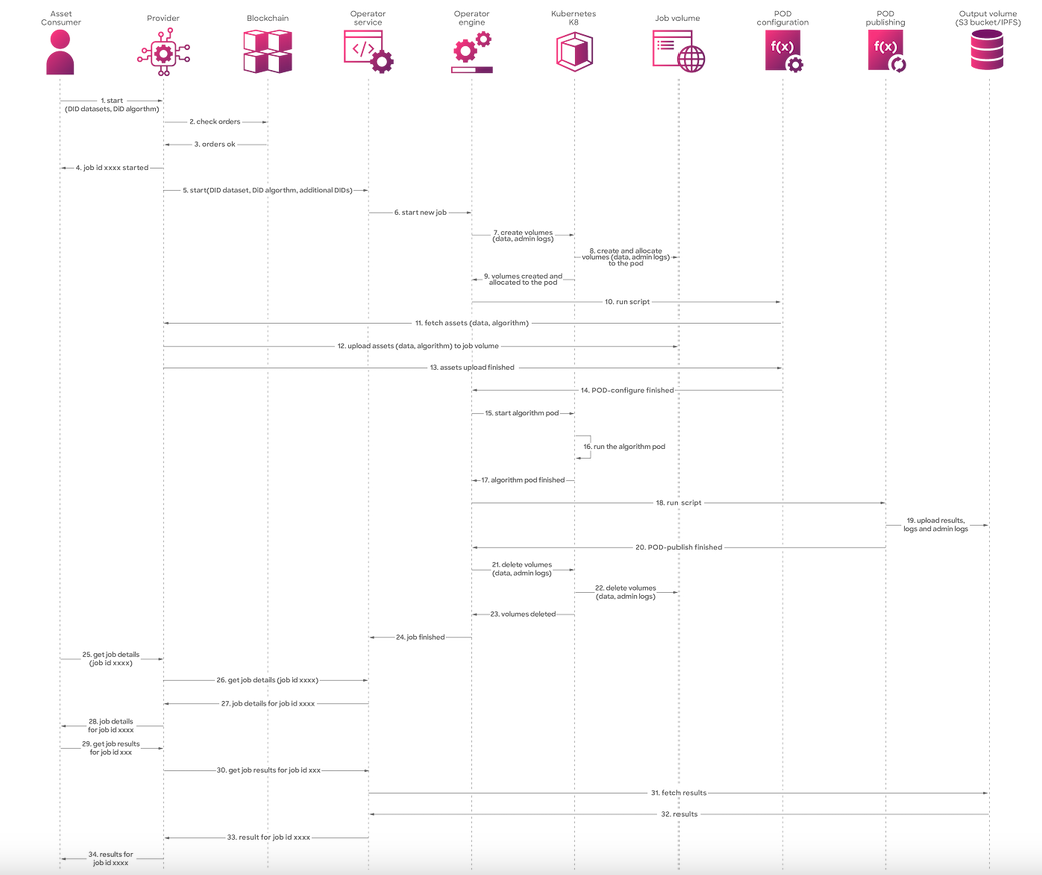

For visual clarity, here's an image of the workflow in action! 🖼️✨

Compute detailed flow diagram

Below, we'll outline each step in detail 📝

Starting a C2D Job

- The consumer initiates a compute-to-data job by selecting the desired data asset and algorithm.

- The provider checks the orders on the blockchain.

- If the orders are valid, the provider can commence the compute flow.

- The provider informs the consumer of the job number's successful creation.

- With the job ID and confirmation of the orders, the consumer starts the job by calling the operator service.

- The operator service communicates with the operator engine to initiate a new job.

Creating the K8 Cluster and Allocating Job Volumes

- As a new job begins, volumes are created on the Kubernetes cluster, a task handled by the operator engine.

- The cluster creates and allocates volumes for the job using the job volumes

- The volumes are created and allocated to the pod

- After volume creation and allocation, the operator engine initiates the

run scripton the pod configuration.

Loading Assets and Algorithms

- The pod configuration requires the data asset and algorithm, prompting a request to the provider for retrieval.

- The provider uploads assets to the allocated job volume.

- Upon completion of file uploads, the provider notifies the pod configuration that the assets are ready for the job.

- The pod configuration informs the operator engine that it's ready to start the job.

Running the Algorithm on Data Asset(s)

- The operator engine launches the algorithm pod on the Kubernetes cluster.

- Kubernetes runs the algorithm pod.

- When the algorithm completes processing the dataset, the operator engine receives confirmation.

- Now that the results are available, the operator engine runs the script on the pod publishing component.

- The pod publishing uploads the results, logs, and admin logs to the output volume.

- Upon successful upload, the operator engine receives notification from the pod publishing, allowing it to clean up the job volumes.

Cleaning Up Volumes and Allocated Space

- The operator engine deletes the K8 volumes.

- The Kubernetes cluster removes all used volumes.

- Once volumes are deleted, the operator engine finalizes the job.

- The operator engine informs the operator service that the job is completed, and the results are now accessible.

Retrieving Job Details

- The consumer retrieves job details by calling the provider's

get job details. - The provider communicates with the operator service to fetch job details.

- The operator service returns the job details to the provider.

- With the job details, the provider can share them with the asset consumer.

Retrieving Job Results

- Equipped with job details, the asset consumer can retrieve the results from the recently executed job.

- The provider engages the operator engine to access the job results.

- As the operator service lacks access to this information, it uses the output volume to fetch the results.

- The output volume provides the stored job results to the operator service.

- The operator service shares the results with the provider.

- The provider then delivers the results to the asset consumer.