GitHub Data Fetching

Overview

Currently, there are three ways of getting data from GitHub to construct various parts of the docs site:

- as external content files via Git submodules of selected repos, at build time

- via GitHub's GraphQL API v4, at build time

- via GitHub's REST API v3, at run time

GitHub GraphQL API

The GitHub GraphQL API integration is done through gatsby-source-graphql and requires authorization.

An environment variable GITHUB_TOKEN needs to be present, set to a personal access token with the scope public_repo.
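As a rough illustration of how the token reaches the plugin, the sketch below builds a gatsby-source-graphql entry for GitHub's GraphQL endpoint. The helper name `githubSourcePlugin`, the `PluginEntry` interface, and the `typeName` value are assumptions made for this example and may differ from the site's actual gatsby-config; the `fieldName` is chosen to match the `github` root field used in this site's queries.

```typescript
// Reduced shape of a Gatsby plugin entry, limited to what this sketch needs.
interface PluginEntry {
  resolve: string;
  options: {
    typeName: string;
    fieldName: string;
    url: string;
    headers: Record<string, string>;
  };
}

// Hypothetical helper: builds the plugin entry and fails early with a clear
// message when GITHUB_TOKEN is missing from the environment.
function githubSourcePlugin(env: Record<string, string | undefined>): PluginEntry {
  const token = env.GITHUB_TOKEN;
  if (!token) {
    throw new Error("GITHUB_TOKEN is not set; add it to your .env file");
  }
  return {
    resolve: "gatsby-source-graphql",
    options: {
      typeName: "GitHub", // assumed name for the stitched schema type
      fieldName: "github", // root field under which GitHub data is queried
      url: "https://api.github.com/graphql",
      headers: { Authorization: `bearer ${token}` },
    },
  };
}

// In a gatsby-config this might be used as:
//   plugins: [githubSourcePlugin(process.env)]
```

Failing at configuration time keeps a missing token from surfacing later as an opaque authorization error during the build.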

For local development, you can simply create a personal access token and use it in your local .env file:

cp .env.sample .env
vi .env
# GITHUB_TOKEN=add_your_token_here

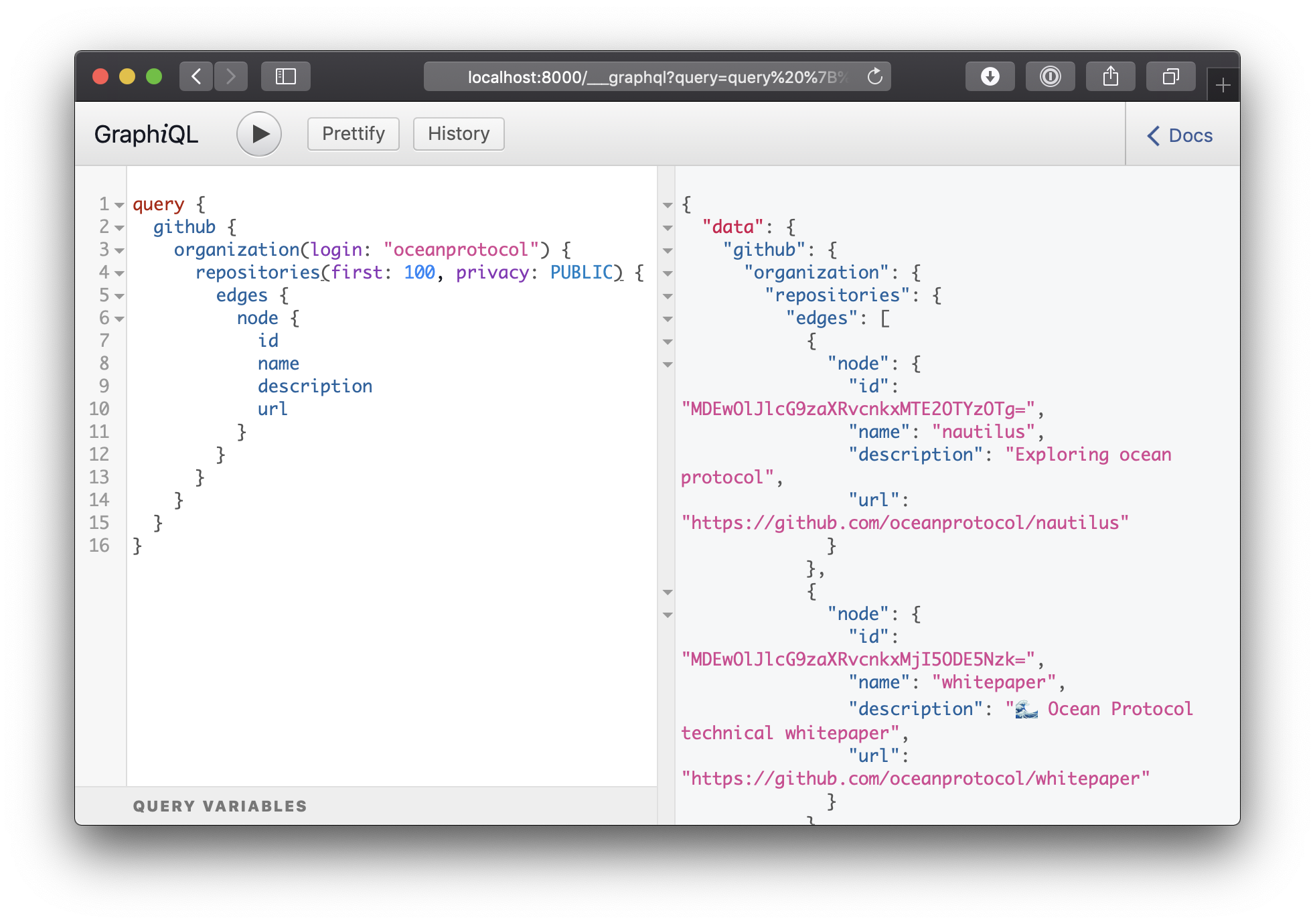

When running the site locally, you can use the GraphiQL client at localhost:8000/___graphql to explore the whole GraphQL layer of the site (not just the GitHub data).

This query should get you started exploring what information is available from GitHub. Everything described in the GitHub GraphQL API documentation can be used:

query {
  github {
    organization(login: "oceanprotocol") {
      repositories(first: 100) {
        edges {
          node {
            name
            description
            url
          }
        }
      }
    }
  }
}
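The result of this query is nested under `github.organization.repositories.edges`, so a component usually flattens it before rendering. The following is a minimal sketch of that step; the type and function names are hypothetical, and the sample data is made up for illustration.

```typescript
// Fields requested for each repository in the query above.
interface RepoNode {
  name: string;
  description: string | null; // treated as nullable here for repos without a description
  url: string;
}

// Shape of the query result, mirroring the nesting of the query.
interface GitHubQueryResult {
  github: {
    organization: {
      repositories: { edges: { node: RepoNode }[] };
    };
  };
}

// Hypothetical helper: unwraps the edges/node layers into a flat list.
function extractRepositories(data: GitHubQueryResult): RepoNode[] {
  return data.github.organization.repositories.edges.map((edge) => edge.node);
}

// Made-up sample data in the shape the query returns.
const sampleResult: GitHubQueryResult = {
  github: {
    organization: {
      repositories: {
        edges: [
          { node: { name: "example-repo", description: null, url: "https://example.com/example-repo" } },
        ],
      },
    },
  },
};

const repositories = extractRepositories(sampleResult);
```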

GitHub REST API

The GitHub GraphQL API is only queried at build time, so further GitHub updates on the client side need to be done through additional fetch API calls. At the moment this is done for the repositories component, where the star and fork counts are updated client-side.
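One way to picture the client-side update is as a merge of build-time data with fresher numbers fetched at run time. The sketch below assumes a response shape of `{ name, stars, forks }` per repository; that shape and the names `Repo`, `LiveStats`, and `mergeLiveStats` are hypothetical and not taken from the actual component or service.

```typescript
// Repository data as rendered from the build-time query (assumed shape).
interface Repo {
  name: string;
  stars: number;
  forks: number;
}

// Fresh numbers fetched on the client (assumed response shape).
interface LiveStats {
  name: string;
  stars: number;
  forks: number;
}

// Returns a new list in which stars and forks are replaced by live values
// wherever a repository with the same name is present in the response.
// Repositories missing from the response keep their build-time numbers.
function mergeLiveStats(repos: Repo[], live: LiveStats[]): Repo[] {
  const byName = new Map(live.map((stats) => [stats.name, stats] as const));
  return repos.map((repo) => {
    const fresh = byName.get(repo.name);
    return fresh ? { ...repo, stars: fresh.stars, forks: fresh.forks } : repo;
  });
}
```

Keeping the merge a pure function means the page still shows the build-time numbers if the run-time request fails or returns only some repositories.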

We use github-projects, a microservice deployed on Now, for all communication with the GitHub REST API v3.

This microservice should be used for all client-side integrations for performance and security reasons; any required changes to the data structure should be made there. The service refetches data automatically, caches results for 15 minutes, and has access to a secret GitHub token for making authorized API calls.
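To illustrate the idea of caching results for a fixed period, here is a minimal time-based cache sketch. It is not the github-projects implementation: the name `cached` and its parameters are assumptions, and it refreshes lazily on the first access after expiry rather than refetching in the background.

```typescript
const FIFTEEN_MINUTES_MS = 15 * 60 * 1000;

// Wraps a loader so that its result is reused until ttlMs has elapsed.
// The clock is injectable so the behavior can be checked without waiting.
function cached<T>(
  load: () => T,
  ttlMs: number = FIFTEEN_MINUTES_MS,
  now: () => number = Date.now
): () => T {
  let value: T | undefined;
  let expiresAt = -Infinity; // forces a load on the first call

  return () => {
    if (now() >= expiresAt) {
      value = load();
      expiresAt = now() + ttlMs;
    }
    return value as T;
  };
}
```

Because `T` can be a promise, the same wrapper could hold an in-flight API request, so concurrent callers would share one upstream call.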

As a next step, the REST API could be made obsolete by using a GraphQL client such as Apollo to query GitHub's GraphQL API at run time too.